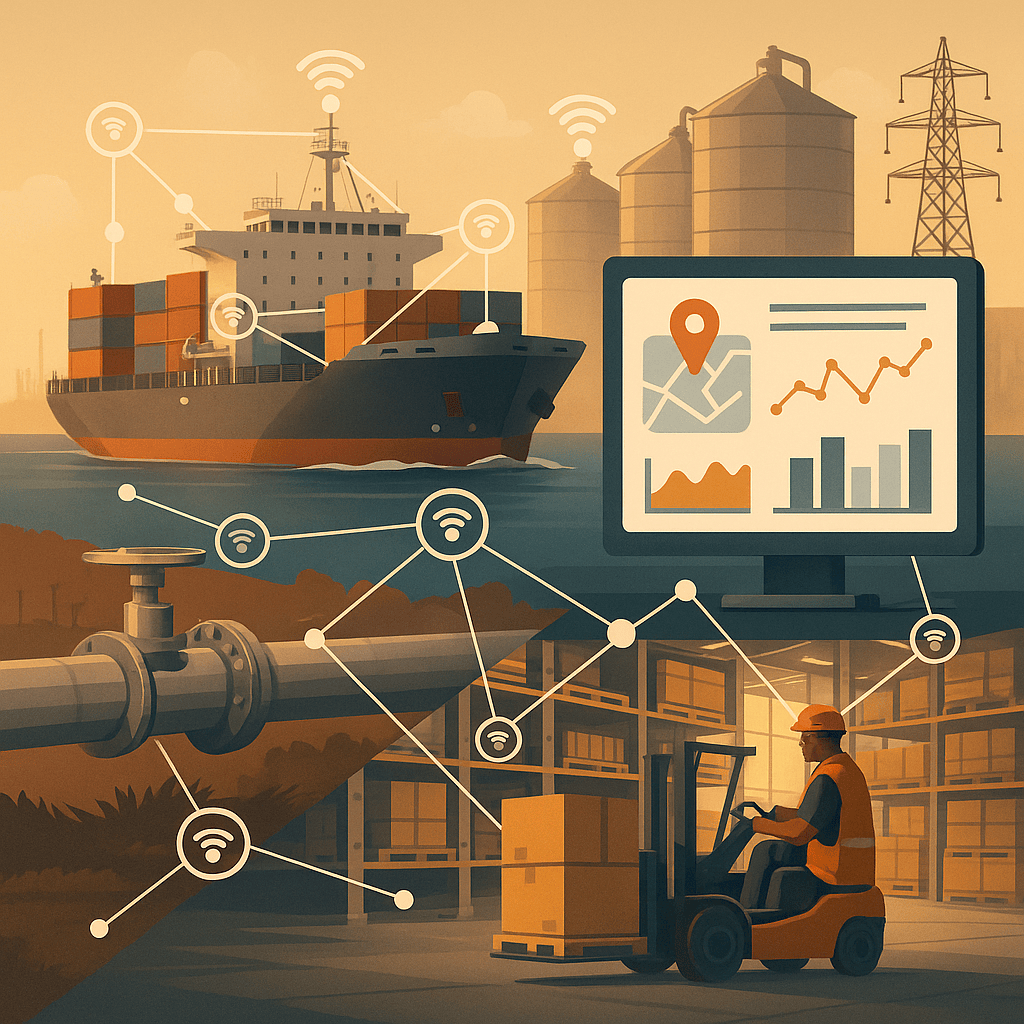

Commodity supply chains are complex networks of vessels, pipelines, warehouses, and trading hubs. Small delays or disruptions can ripple through the network and erode profitability and market positions. Integrating IoT data in real time gives CIOs a way to improve their firms' visibility and control over these networks.

IoT devices generate vast amounts of data. Sensors on ships can report location and cargo conditions, pipelines can send pressure readings, and warehouses can track inventory in real time. When this data is integrated with trading systems, firms gain the ability to anticipate disruptions, optimize logistics, and improve risk management.
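Before sensor data can feed a trading system, readings from very different devices usually need a common shape. The following is a minimal sketch of such a normalization step, not a reference design. The `SensorEvent` schema, the `normalize` helper, and the raw field names (`type`, `id`, `metric`, `value`, `ts`) are all assumptions made for illustration; real device payloads will differ.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class SensorEvent:
    """Hypothetical common schema for vessel, pipeline, and warehouse readings."""
    source_type: str       # e.g. "vessel", "pipeline", or "warehouse"
    source_id: str         # identifier of the reporting asset
    metric: str            # what was measured, e.g. "pressure_bar"
    value: float           # the measurement itself
    recorded_at: datetime  # timezone-aware UTC timestamp


def normalize(raw: dict) -> SensorEvent:
    """Map a raw payload (assumed field names) onto the common schema."""
    return SensorEvent(
        source_type=raw["type"],
        source_id=raw["id"],
        metric=raw["metric"],
        value=float(raw["value"]),  # devices may send numbers as strings
        recorded_at=datetime.fromtimestamp(raw["ts"], tz=timezone.utc),
    )


# Example payload from a hypothetical pipeline sensor (Unix timestamp in seconds).
event = normalize(
    {"type": "pipeline", "id": "PL-07", "metric": "pressure_bar",
     "value": "49.8", "ts": 1700000000}
)
print(event)
```

Keeping every reading in one schema means downstream analytics and trading integrations only need to understand a single record type, whatever the device that produced it.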

Databricks provides the processing power to handle these large streams of IoT data, while Snowflake offers a secure and governed environment for analytics. Python enables fast development of ingestion scripts and machine learning models that detect anomalies. Integration with .NET-based commodity trading and risk management (CTRM) systems gives trading desks access to the latest supply chain insights.
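As a rough illustration of the kind of anomaly detection Python makes quick to prototype, here is a minimal sketch that flags readings far from a trailing average. It uses a simple rolling z-score rather than a trained machine learning model, and the `detect_anomalies` function, the window size, the threshold, and the sample pressure values are all assumptions chosen for the example.

```python
from collections import deque
from statistics import mean, stdev


def detect_anomalies(readings, window=5, threshold=3.0):
    """Return the indices of readings that deviate from the trailing window.

    A reading is flagged when it lies more than `threshold` sample standard
    deviations from the mean of the previous `window` readings.
    """
    history = deque(maxlen=window)
    flagged = []
    for i, value in enumerate(readings):
        if len(history) == window:
            mu = mean(history)
            sigma = stdev(history)
            # A flat window (sigma == 0) is skipped to avoid dividing by zero.
            if sigma > 0 and abs(value - mu) / sigma > threshold:
                flagged.append(i)
        history.append(value)
    return flagged


# Hypothetical pipeline pressure readings in bar; the drop at index 6
# stands in for a sudden pressure loss.
pressures = [50.1, 49.9, 50.0, 50.2, 49.8, 50.1, 42.0, 50.0]
print(detect_anomalies(pressures))  # prints [6]
```

In a production pipeline the same check would more likely run inside a streaming job, and a flagged reading stays in the trailing window here, so a more careful version might exclude outliers from the baseline.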

The challenge lies in execution. Real-time IoT data pipelines require cloud-native infrastructure, secure APIs, and resilient deployments on Azure and Kubernetes. Few internal IT teams have the capacity to design and maintain these systems while also supporting daily trading operations.

Staff augmentation provides CIOs with immediate expertise. External engineers can build streaming data pipelines, configure real-time dashboards, and connect IoT feeds to existing CTRM systems. This allows firms to deploy solutions faster while helping to reduce delivery risk and meet compliance requirements.

Real-time IoT integration is no longer optional for firms that want to remain competitive. With staff augmentation, CIOs can unlock supply chain visibility, improve resilience, and give traders the insights they need to respond to global events as they happen.